IPI

Image Processing and Interpretation

TELIN department

Ghent University

Welcome

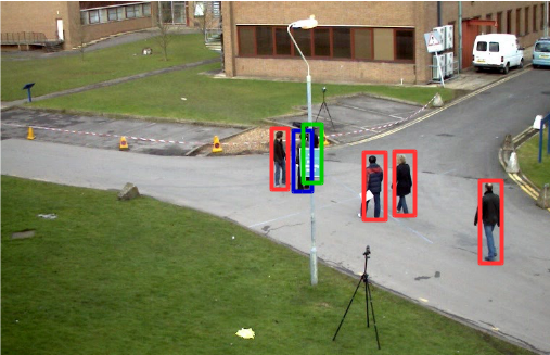

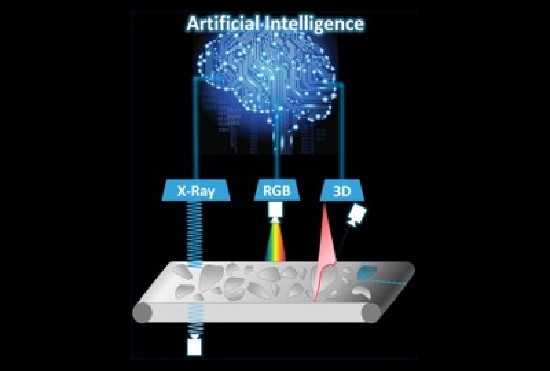

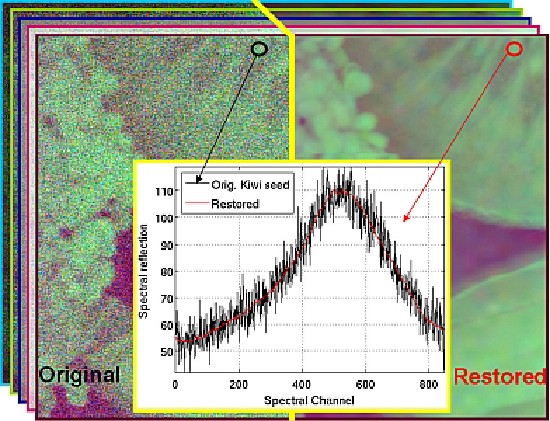

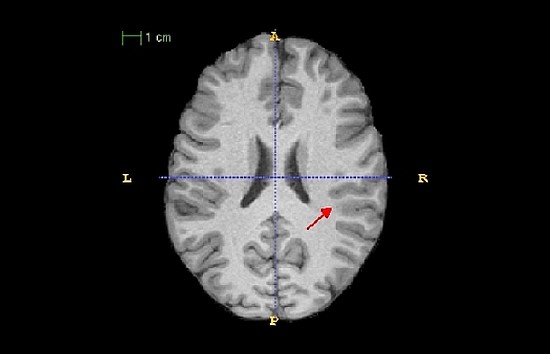

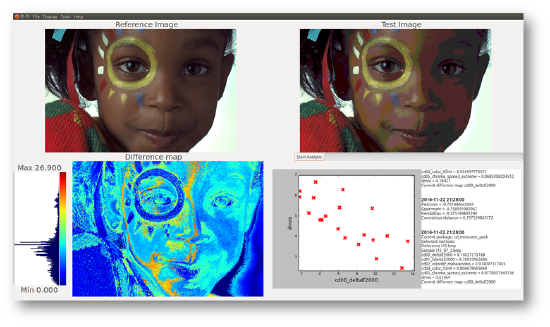

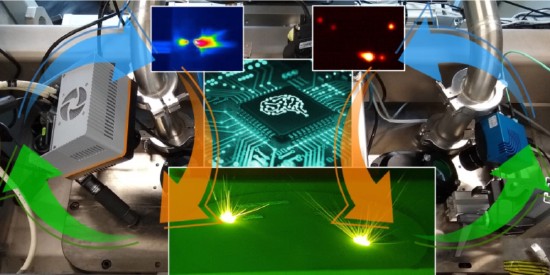

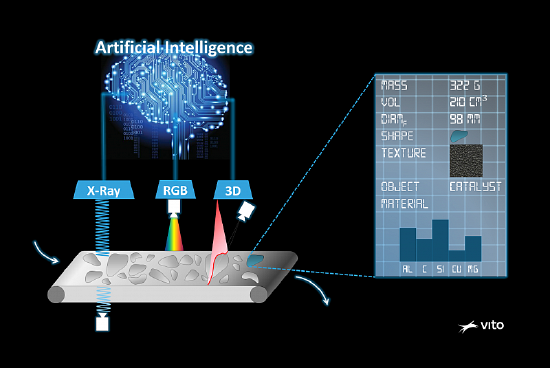

IPI researchers conduct state-of-the-art research in digital image and video processing for a wide range of applications. Our research covers computer vision, sensor fusion, remote sensing, image reconstruction and enhancement, 3D image and video enhancement, synthetic view generation, and several other related areas. We apply our technology to applications such as autonomous vehicles, drone-based and intelligent surveillance, agricultural and industrial inspection, (bio)medical imaging, consumer multimedia, and art.

Our group currently has more than 40 researchers. You can find the full list of our team here. Our senior members are:

- Professors: Wilfried Philips, Bart Goossens, Hiep Quang Luong, Heidi Steendam, Jan Aelterman and Peter Veelaert

- Post-docs: Ljubomir Jovanov, David van Hamme, Danilo Babin, Michiel Vlaminck, Brian Booth, Martin Dimitrievski, Iman Marivani, Marwan Yusuf and Mohsen Nourazar

- Technology developers: Ljiljana Platisa and Ewout Vansteenkiste

- Associated researcher: Wenzhi Liao

If you are interested in joining our group as a doctoral student, please visit the following page in English or in Dutch. Spontaneous applications are always welcome. Open positions are listed under the Vacancy tab in the main menu.

IPI is a core research group of imec.

Several of our members are associated with the UGent UAV Research Center and the UGent Artificial Intelligence consortium. IPI leads the Intelligent Information Processing valorisation consortium I-KNOW and participates in the Hybrid Computed Tomography valorisation consortium HyCT.