Topics

Networked sensors

- Sensor networks and methods for wellness monitoring of the elderly

- Collaborative Tracking in Smart Camera Networks

- Distributed Camera Networks

- Multi Camera Networks

- 3D reconstruction using multiple cameras

- Real-time video mosaicking

Scene and human behavior analysis

- Foreground background segmentation for dynamic camera viewpoints

- Foreground/background segmentation

- Automatic analysis of the worker's behaviour

- Gesture Recognition

- Behaviour analysis

- Immersive communication by means of computer vision (iCocoon)

- Material Analysis using Image Processing

Sensor networks and methods for wellness monitoring of the elderly

Addressing the challenges of a rapidly ageing population has become a priority for many Western countries. Our aim is to relieve the pressure on nursing homes' limited capacity by developing an affordable, round-the-clock monitoring solution that can be used in assisted living facilities. This intelligent solution empowers older people to live (semi-)autonomously for a longer period of time by alerting their caregivers when assistance is required. To do so, we use low-cost, low-resolution visual sensors that monitor people 24/7 and gather long-term, objective information about their physical and mental wellbeing.

The use of cameras to monitor residents in assisted living facilities or nursing homes is not new. Traditionally, though, these systems rely on high-resolution cameras to make sure all relevant information is captured. This approach is not only expensive to deploy and maintain, it also requires high bandwidth to transport and process the high-resolution video feeds, and it is often challenged from a privacy perspective. Moreover, existing systems are generally ‘dumb’: they are not capable of monitoring (let alone interpreting) behavior or the evolution of disorders over time.

We took a different approach by replacing high-resolution cameras with a distributed system of low-cost, low-resolution visual sensors that capture what is happening on a given premises in a privacy-compliant way, and by using advanced algorithms to translate the low-resolution video feeds into valuable information about residents’ condition. With this approach, formal and informal caregivers get first-hand insights into physical problems, sleeping habits, eating disorders, or even the first stages of dementia, so that hazardous situations can be detected before serious medical conditions develop.

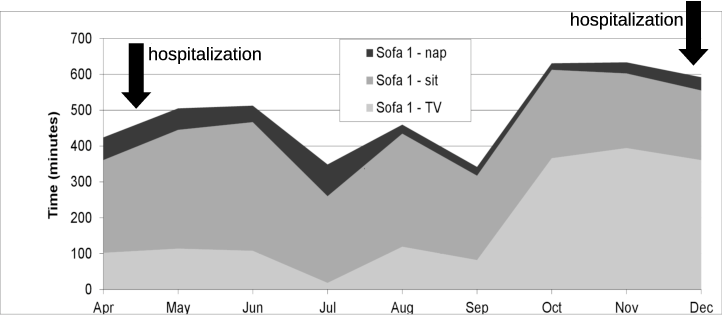

Figure 1: The ADL parameters performed on Sofa 1 from April to December

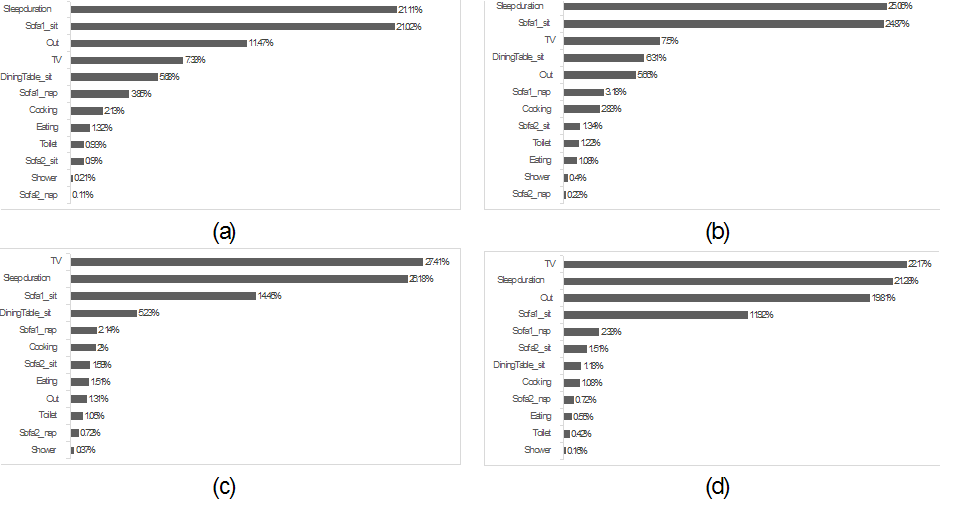

Figure 2: Activities of daily living over four months: (a) May; (b) June; (c) November; (d) December

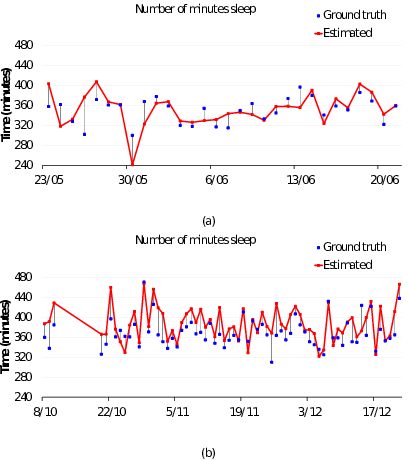

Figure 3: A comparison between sleep duration estimates and ground truth: (a) May and June; (b) October, November and December.

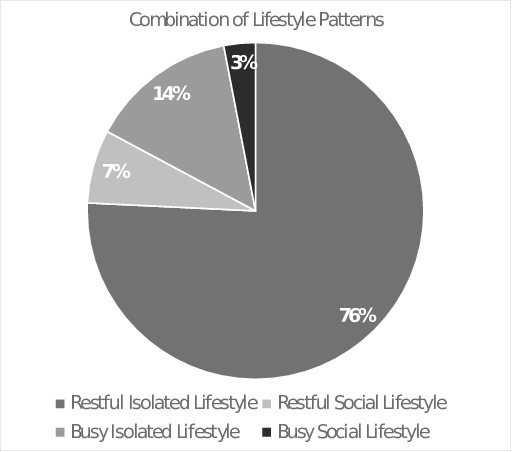

Figure 4: Analysis of lifestyle patterns combination

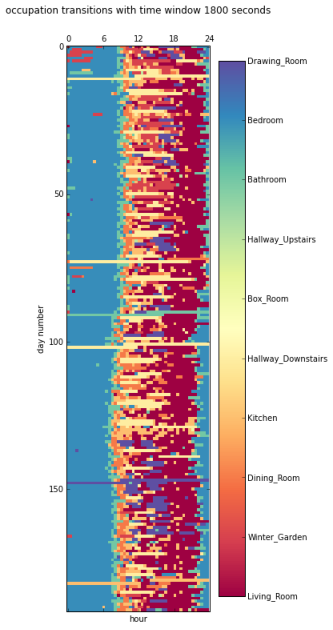

Figure 5: The daily room occupation of the Surrey residence from PIR sensor data.

Contact: prof.dr.ir. Wilfried Philips

References:

Eldib, M., Deboeverie, F., Philips, W., & Aghajan, H. (2016). Behavior analysis for elderly care using a network of low-resolution visual sensors. Journal of Electronic Imaging, 25(4), 1–17.

Eldib, M., Zhang, T., Deboeverie, F., Philips, W., & Aghajan, H. (2016). A Data Fusion Approach for Identifying Lifestyle Patterns in Elderly Care. In F. Flórez-Revuelta & A. Andre Chaaraoui (Eds.), Active and Assisted Living: Technologies and Applications. IET - The Institution of Engineering and Technology.

Eldib, M., Deboeverie, F., Philips, W., & Aghajan, H. (2015). Sleep analysis for elderly care using a low-resolution visual sensor network. Lecture Notes in Computer Science (Vol. 9277, pp. 26–38). Presented at the 6th International Workshop on Human Behavior Understanding (HBU 2015), Springer International Publishing.

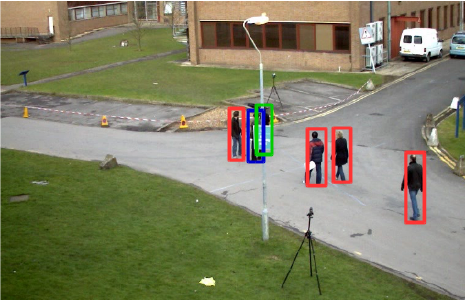

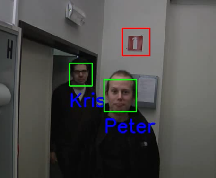

Collaborative Tracking in Smart Camera Networks

Visual tracking of multiple people in an uncontrolled environment is a very challenging task due to the non-rigid nature of the human body and object-person and person-person occlusion. As multiple people move around in a scene, which usually contains static occluders (for example, furniture in indoor scenes and lamp posts, trees, etc. in outdoor scenes), a person may sometimes be occluded by other people or objects in a particular camera view. When the observation of a target from a particular view is not good enough for tracking due to either type of occlusion, the camera inquires whether one or more other cameras have a better observation of the target. If one or more other cameras have less or no occlusion, the camera asks them for assistance in tracking the target until the occlusion in its own view becomes less severe or ends.
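The assistance request described above can be illustrated with a small sketch. It is not the published method: the `Observation` class, the scalar `occlusion` score and the `threshold` parameter are hypothetical names introduced only for this example, which assumes each camera can summarise how badly a target is occluded in its view as a number between 0 and 1.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    camera_id: str
    occlusion: float  # hypothetical score: 0.0 = fully visible, 1.0 = fully occluded


def select_assistants(own, others, threshold=0.5):
    """Return the ids of cameras that should assist `own` in tracking a target.

    If the requesting camera's occlusion is at or below `threshold`, no help
    is requested. Otherwise every other camera with strictly less occlusion
    is returned, least occluded first.
    """
    if own.occlusion <= threshold:
        return []
    better = [o for o in others if o.occlusion < own.occlusion]
    return [o.camera_id for o in sorted(better, key=lambda o: o.occlusion)]


own = Observation("cam0", occlusion=0.8)
others = [
    Observation("cam1", occlusion=0.1),
    Observation("cam2", occlusion=0.9),
    Observation("cam3", occlusion=0.4),
]
assistants = select_assistants(own, others)
print(assistants)  # ['cam1', 'cam3']
```

In a real network the request would stay active only while the occlusion in the requesting view persists, so the selection would be re-evaluated every frame.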


Figure 1: Tracking people using two assisting cameras

Contact: dr.ir. Nyan Bo Bo

References:

- N. Bo Bo, F. Deboeverie, P. Veelaert, W. Philips, "Multiple People Tracking in Smart Camera Networks By Greedy Joint-Likelihood Maximization", in 11th International Conference on Computer Vision Theory and Applications (VISAPP2016)

Distributed Camera Networks

For many purposes, the deployment of a camera network provides substantial advantages over a single fixed-viewpoint camera. For example, in scene monitoring, camera networks can alleviate occlusion problems; in gesture recognition, cues coming from different viewpoints can lead to a more robust decision. Camera networks also entail substantial challenges concerning the processing and/or storage of the large amounts of data they produce. The purpose of this research is to develop algorithms that exploit the additional information available in the network, while simultaneously keeping the increased data volume under control. A distributed algorithm architecture is key in this respect, as it allows the video data to be reduced at the camera nodes themselves, prior to storage and/or transmission over the network. The recently introduced "smart cameras" have the necessary image processing and communication hardware on board to tackle this task.

The research in our group has so far focused on distributed viewpoint selection in camera networks. More information and demos can be found here

Contact: prof.dr.ir. Wilfried Philips

References:

L. Tessens, M. Morbee, H. Lee, W. Philips, H. Aghajan, "Principal view determination for camera selection in distributed smart camera networks", 2nd ACM/IEEE International Conference on Distributed Smart Cameras (ICDSC), Stanford, USA, September 2008, pp. 1-10.

M. Morbee, L. Tessens, H. Lee, W. Philips, and H. Aghajan, "Optimal camera selection in vision networks for shape approximation", in Proceedings of IEEE International Workshop on Multimedia Signal Processing (MMSP), Cairns, Queensland, Australia, October 2008, pp. 46-51.

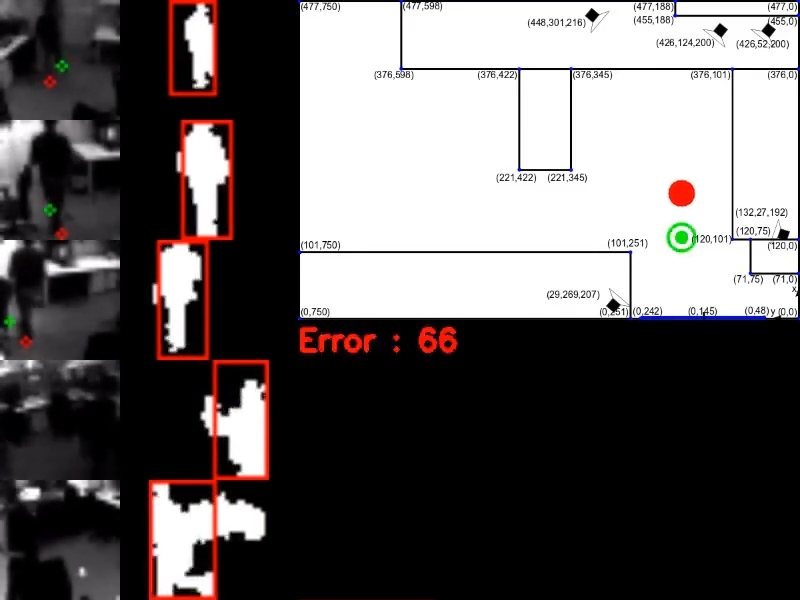

Multi Camera Networks

In e-health care projects such as the LittleSister project and the Sonopa project we use extremely low-resolution visual sensors of 30 by 30 pixels to improve the quality of life of elderly people in their homes. The research focuses on long-term behavior analysis, such as the detection of decreased mobility. The video shows the grayscale images of 5 sensors, the corresponding foreground masks and a floor map with the position estimates from tracking based on likelihood estimation (red dot) and the ground truth position estimates from ultra-wideband data (green dot).

Contact: prof.dr.ir. Richard Kleihorst

References: LittleSister project home page; Sonopa project home page

3D reconstruction using multiple cameras

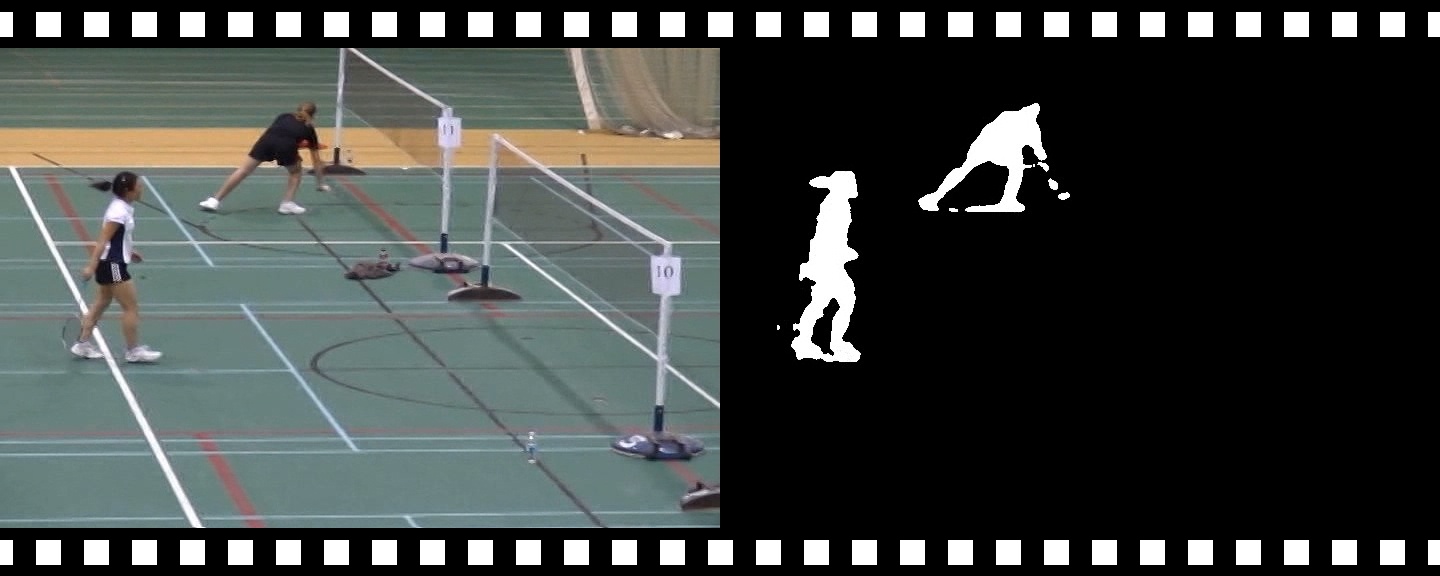

The goal of this research is to reconstruct dynamic 3D objects from multiple camera views. First, each of the cameras searches for movement by means of state-of-the-art foreground/background segmentation techniques. The silhouettes generated by these algorithms are then used in a shape-from-silhouettes algorithm to generate a 3D reconstruction of the moving object, e.g. the person in the example. From the 3D reconstruction we are able to extract the centroid position over time and perform a step length analysis. We are currently experimenting with space carving to add texture to the 3D reconstruction, to enhance it and make it more visually appealing. Some form of skeletonisation is also still in the pipeline. Besides this, we have built a framework for occlusion handling in which occlusion is automatically detected and resolved by using inconsistencies between different camera views.
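The core idea of shape-from-silhouettes can be sketched in a few lines: a voxel is kept only if its projection falls inside the silhouette in every view. The sketch below is a deliberately simplified illustration, not the group's implementation. It assumes three hypothetical orthographic cameras looking along the x, y and z axes and a tiny voxel grid; real systems use calibrated perspective cameras and handle occlusion. The `carve` and `centroid` names are introduced for this example only.

```python
from itertools import product


def carve(silhouettes, size):
    """Keep the voxels whose projection lies inside every silhouette.

    `silhouettes` maps a view axis ("x", "y" or "z") to the set of 2D pixel
    coordinates marked as foreground in that (orthographic) view.
    """
    project = {
        "x": lambda v: (v[1], v[2]),  # camera looks along x, sees (y, z)
        "y": lambda v: (v[0], v[2]),  # camera looks along y, sees (x, z)
        "z": lambda v: (v[0], v[1]),  # camera looks along z, sees (x, y)
    }
    return {
        v
        for v in product(range(size), repeat=3)
        if all(project[axis](v) in mask for axis, mask in silhouettes.items())
    }


def centroid(voxels):
    """Mean position of the occupied voxels."""
    n = len(voxels)
    return tuple(sum(v[i] for v in voxels) / n for i in range(3))


# Synthetic object: a 2x2x2 cube occupying coordinates 1..2 on every axis,
# which appears as the same 2x2 square in all three views.
square = {(a, b) for a in (1, 2) for b in (1, 2)}
hull = carve({"x": square, "y": square, "z": square}, size=4)
print(len(hull), centroid(hull))  # 8 (1.5, 1.5, 1.5)
```

Tracking the centroid of the carved volume from frame to frame gives the position-over-time signal on which a step length analysis could be based.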

References:

Slembrouck, M., Niño Castañeda, J., Allebosch, G., Van Cauwelaert, D., Veelaert, P., & Philips, W. (2015). High performance multi-camera tracking using shapes-from-silhouettes and occlusion removal. Proceedings of the 9th International Conference on Distributed Smart Cameras (pp. 44–49). Presented at the 9th International Conference on Distributed Smart Cameras (ICDSC 2015), New York, NY, USA: ACM.

Slembrouck, M., Van Cauwelaert, D., Veelaert, P., & Philips, W. (2015). Shape-from-silhouettes algorithm with built-in occlusion detection and removal. International Conference on Computer Vision Theory and Applications, Proceedings. Presented at the International Conference on Computer Vision Theory and Applications (VISAPP 2015), SCITEPRESS.

Contact: ir. Maarten Slembrouck

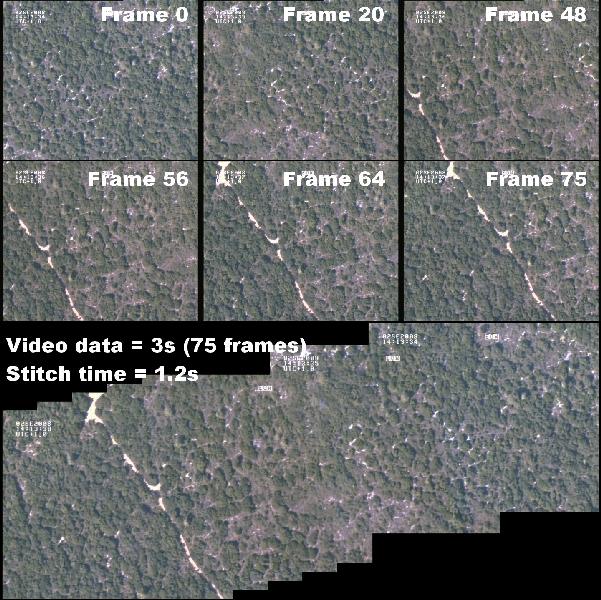

Real-time video mosaicking

Disasters like forest fires require a fast terrain overview. Our technique takes streaming video data taken from aboard a plane as input and stitches the images together in real-time, using mutual information as the similarity or matching criterion. We achieve real-time speeds by dynamically selecting good frames to stitch, based on a quality measure.
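To make the matching criterion concrete, the following sketch computes plain mutual information between two images from their joint intensity histogram. It is an illustration under simplifying assumptions (images given as flat lists of integer intensities, no binning, no speed-up), and the function name `mutual_information` is chosen for this example rather than taken from the project.

```python
import math
from collections import Counter


def mutual_information(img_a, img_b):
    """Mutual information (in bits) between two equally sized grayscale images.

    Images are given as flat sequences of integer intensities.
    """
    if len(img_a) != len(img_b):
        raise ValueError("images must have the same number of pixels")
    n = len(img_a)
    joint = Counter(zip(img_a, img_b))
    count_a = Counter(img_a)
    count_b = Counter(img_b)
    mi = 0.0
    for (a, b), c in joint.items():
        # p(a, b) * log2( p(a, b) / (p(a) * p(b)) ), written with raw counts
        mi += (c / n) * math.log2(c * n / (count_a[a] * count_b[b]))
    return mi


reference = [0, 0, 1, 1, 2, 2, 3, 3]
aligned = [10, 10, 11, 11, 12, 12, 13, 13]     # same structure, other intensities
misaligned = [10, 11, 12, 13, 10, 11, 12, 13]  # structure no longer matches

mi_aligned = mutual_information(reference, aligned)
mi_misaligned = mutual_information(reference, misaligned)
print(mi_aligned, mi_misaligned)
```

The aligned pair scores higher even though its intensities differ from the reference, which is why mutual information suits registration of frames taken under changing exposure or lighting. A registration loop would search for the transformation that maximises this score.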

Contact: dr.ir. Hiep Luong

References:

- K. Douterloigne, S. Gautama, W. Philips, "Real-time UAV Image and Video Registration for Disaster Management using Speed Up Mutual Information", Proc. of the International Symposium on Light Weight Unmanned Aerial Vehicle Systems and Subsystems, March 2009, Oostende, Belgium

Foreground background segmentation for dynamic camera viewpoints

In video sequences, interesting objects can be separated from the background by comparing the current input to a background model. As soon as the camera viewpoint changes, this comparison step becomes more difficult, since the input frame needs to be mapped to the correct position in the model, and vice versa. Our algorithm is able to handle various scenarios, such as jittery, purely panning and simultaneously panning and tilting cameras. This is demonstrated in the following examples:

Figure 1: Foreground background segmentation in the presence of strong jitter

Figure 2: Foreground background segmentation in the presence of panning

Figure 3: Foreground background segmentation in the presence of panning and tilting

Contact: ing. Gianni Allebosch

References:

Allebosch, G., Van Hamme, D., Deboeverie, F., Veelaert, P., & Philips, W. (2016). C-EFIC: Color and edge based foreground background segmentation with interior classification. In J. Braz, J. Pettré, P. Richard, L. Linsen, S. Battiato, & F. Imai (Eds.), Communications in Computer and Information Science (Vol. 598, pp. 433–454). Presented at the 10th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP), Cham, Switzerland: Springer.

Allebosch, G., Deboeverie, F., Veelaert, P., & Philips, W. (2015). EFIC: edge based foreground background segmentation and interior classification for dynamic camera viewpoints. In S. Battiato, J. Blanc-Talon, G. Gallo, W. Philips, D. Popescu, & P. Scheunders (Eds.), Lecture Notes in Computer Science (Vol. 9386, pp. 130–141). Presented at the 16th International Conference on Advanced Concepts for Intelligent Vision Systems (ACIVS), Cham, Switzerland: Springer.

Allebosch, G., Van Hamme, D., Deboeverie, F., Veelaert, P., & Philips, W. (2015). Edge based foreground background estimation with interior/exterior classification. In J. Braz, S. Battiato, & F. Imai (Eds.), Proceedings of the 10th International Conference on Computer Vision Theory and Applications (Vol. 3, pp. 369–375). Presented at the 10th International Conference on Computer Vision Theory and Applications (VISAPP 2015), Portugal: SCITEPRESS – Science and Technology Publications.

Foreground/background segmentation

Foreground/background segmentation is a crucial pre-processing step in many applications, aimed at separating moving objects (the foreground) from a background scene. Many techniques use this operation as part of their workflow. For instance, tracking algorithms may focus on foreground regions to detect moving objects (and thereby speed up object matching), or to track objects in space and time. Humans can easily distinguish moving objects from a background scene, but this remains one of the most challenging tasks in computer vision, despite the many available techniques for detecting moving objects in indoor and outdoor scenes. The following properties are usually expected from a detection algorithm: accurate detection of moving objects (in space and time); robustness to changing environmental conditions, especially changes in illumination; real-time processing; and low latency. The last two properties are essential for tracking applications. In particular, real-time processing is needed to achieve reasonable tracking performance, since tracking approaches perform best, or at least benefit, when they operate at the camera frame rate.

We propose two approaches. The main idea is to apply a decision-tree-like approach to foreground/background segmentation, i.e. we calculate statistical measures at each node of the tree to classify a pixel as either a foreground or a background pixel. As the statistical background model, we use a long-term and a short-term weighted average, based on different learning factors. In the proposed approaches, we use either image gradients or image intensities as statistical features of foreground and background regions. This research has been published at Advanced Concepts for Intelligent Vision Systems (ACIVS).
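As a rough illustration of a background model built from a long-term and a short-term weighted average, the sketch below keeps two exponentially weighted averages per pixel and applies a two-node decision to each pixel. The learning factors, the threshold and the exact order of the tests are assumptions made for this example; the published approaches compute their own statistical measures and may use gradients rather than intensities.

```python
def update_average(model, frame, alpha):
    """Exponentially weighted average: model <- (1 - alpha) * model + alpha * frame."""
    return [(1.0 - alpha) * m + alpha * f for m, f in zip(model, frame)]


def classify(frame, long_term, short_term, threshold):
    """Two-node decision per pixel; True means foreground."""
    mask = []
    for f, lt, st in zip(frame, long_term, short_term):
        if abs(f - lt) <= threshold:
            mask.append(False)  # node 1: agrees with the slowly adapting model
        elif abs(f - st) <= threshold:
            mask.append(False)  # node 2: agrees with the quickly adapting model
        else:
            mask.append(True)   # matches neither model
    return mask


# Assumed parameters for this sketch only.
ALPHA_LONG, ALPHA_SHORT, THRESHOLD = 0.01, 0.5, 20.0

# A four-pixel "image": the scene brightens globally from 100 to 130.
long_term = [100.0] * 4
short_term = [100.0] * 4
for _ in range(20):
    frame = [130.0] * 4
    long_term = update_average(long_term, frame, ALPHA_LONG)
    short_term = update_average(short_term, frame, ALPHA_SHORT)

# An object (intensity 220) now appears at pixel 2.
mask = classify([130.0, 130.0, 220.0, 130.0], long_term, short_term, THRESHOLD)
print(mask)  # [False, False, True, False]
```

In this toy sequence the long-term average has barely moved after the lighting change, so on its own it would flag every pixel. The short-term average has already adapted, which leaves only the new object marked as foreground.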

Foreground/background detection on a sequence with global and local lighting changes

References:

- Grünwedel, S., Van Hese, P., & Philips, W. (2011). An edge-based approach for robust foreground detection. In Jacques Blanc-Talon, R. Kleihorst, W. Philips, D. Popescu, & P. Scheunders (Eds.), Lecture Notes in Computer Science (Vol. 6915, pp. 554–565). Presented at the 13th International Conference on Advanced Concepts for Intelligent Vision Systems (ACIVS)/ACM/IEEE International Conference on Distributed Smart Cameras, Berlin

Contact: ing. Gianni Allebosch

Automatic analysis of the worker's behaviour

In this section we demonstrate automatic analysis of the behavior of a worker at the production line. More precisely, we investigate variations between different working cycles, the worker's learning speed for new sequences, and picking patterns. In addition, we automatically classify working cycles into a number of classes, i.e. assembly operations. Our main goal is to optimize the workplace using the information obtained through this analysis, without having to displace the worker.

Contact: ir. Maarten Slembrouck

References:

- Slembrouck, M., Van Cauwelaert, D., Van Hamme, D., Van Haerenborgh, D., Van Hese, P., Veelaert, P., & Philips, W., "Self-learning voxel-based multi-camera occlusion maps for 3D reconstruction", in Proceedings of the 9th International Conference on Computer Vision Theory and Applications, Lisbon, Portugal, pp. 502-510, 2014. (ISBN: 978-989-758-004-8)

Gesture recognition

Real-time hand tracking and hand detection remain challenging tasks for modern human-computer interfaces. Our research focuses on real-time hand tracking and hand detection in monocular video. Our algorithms can detect hands in any pose and can automatically initialize a particle-filter-based tracking solution, while taking care of error detection and recovery. The tracking algorithm operates in real time and tracks hands in unconstrained environments and illumination conditions. A closed-loop system allows the solution to automatically learn from previous mistakes and to adapt to its environment.
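For readers unfamiliar with particle filters, the following is a minimal bootstrap particle filter for a 2D position. It is a generic sketch, not the group's hand tracker. It assumes a random-walk motion model, a Gaussian measurement likelihood around a noisy position measurement, and a uniform initialisation over a hypothetical 100 by 100 image, whereas the system described above initialises from a hand detector. All parameter values are chosen for illustration.

```python
import math
import random


def particle_filter_step(particles, measurement, motion_std, meas_std, rng):
    """One predict-weight-resample cycle of a bootstrap particle filter."""
    # Predict: diffuse each particle with a random-walk motion model.
    predicted = [
        (x + rng.gauss(0.0, motion_std), y + rng.gauss(0.0, motion_std))
        for x, y in particles
    ]
    # Weight: Gaussian likelihood of the measurement given each particle.
    weights = [
        math.exp(-((x - measurement[0]) ** 2 + (y - measurement[1]) ** 2)
                 / (2.0 * meas_std ** 2))
        for x, y in predicted
    ]
    if sum(weights) == 0.0:
        weights = [1.0] * len(predicted)  # all weights underflowed: fall back to uniform
    # Resample: draw particles in proportion to their weights.
    return rng.choices(predicted, weights=weights, k=len(predicted))


def estimate(particles):
    """Mean of the particle cloud."""
    n = len(particles)
    return (sum(p[0] for p in particles) / n, sum(p[1] for p in particles) / n)


rng = random.Random(0)
particles = [(rng.uniform(0, 100), rng.uniform(0, 100)) for _ in range(500)]
true_pos = (10.0, 10.0)
for _ in range(30):
    # Simulated target motion and a noisy position measurement.
    true_pos = (true_pos[0] + 1.0, true_pos[1] + 0.5)
    measurement = (true_pos[0] + rng.gauss(0, 1.0), true_pos[1] + rng.gauss(0, 1.0))
    particles = particle_filter_step(particles, measurement, 3.0, 2.0, rng)

est = estimate(particles)
error = math.hypot(est[0] - true_pos[0], est[1] - true_pos[1])
print(f"true={true_pos}, estimate=({est[0]:.1f}, {est[1]:.1f}), error={error:.2f}")
```

In a hand tracker, the measurement likelihood would come from image evidence such as colour or edge cues rather than from a simulated position, and a detector would re-initialise the particles when tracking fails.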

Contact: prof.dr.ir. Wilfried Philips

References:

- Spruyt, V., Ledda, A. and Philips, W., "Real-time, long-term hand tracking with unsupervised initialization", Proceedings of the IEEE International Conference on Image Processing.

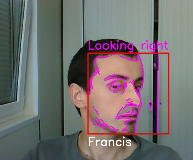

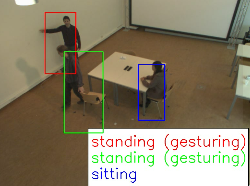

Behavior analysis

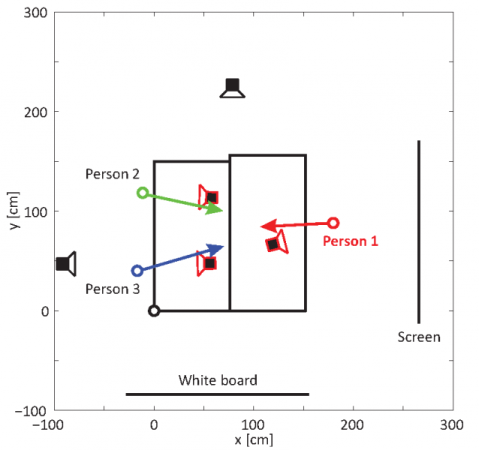

Human activity analysis is an important part of ambient intelligence and computer vision. Its goal is to automatically analyze ongoing activities from one or multiple unknown video streams, so that they can be correctly classified into a set of activities. Many applications are already available to support people in carrying out their everyday activities and tasks, such as automatic light control, elderly care, meeting analysis (smart meetings), etc. In this context, making use of low-level data, such as positional data for each meeting attendee or detailed face analysis, could help high-level analysis to understand, describe and explore the dynamics in meetings. Here, activities range from events like "who is talking" or "who is looking at whom" to more complex ones such as "who is the main speaker" or "who is paying attention in the meeting and who is not". However, the detection of such activities is still very challenging. Low-level data can be corrupted or imprecise due to environmental changes and inherent errors of the employed algorithms. Therefore, low-level data cannot be assumed to be correct or very precise at all times.

In this research, we focus on an approach to understand the dynamics in meetings using a multi-camera setup consisting of fixed ambient cameras and portable close-up cameras. Fixed ambient cameras are used, for example, to track meeting attendees or to observe a certain area in the meeting room, such as a whiteboard or a screen. In contrast, portable close-up cameras, such as laptop cameras, usually have only one specific participant in the field of view. These cameras can be used for detailed face analysis, but are also susceptible to small movements, and therefore an on-line calibration process is needed.

References:

Sebastian Grünwedel, Multi-camera cooperative scene interpretation, PhD Thesis, 2013

Contact: ir. Dimitri Van Cauwelaert

Immersive Communication by means of Computer vision - IBBT (now imec) project iCoCoon

The goal of this project is to drastically change the way people communicate remotely. The project targets the development of (third-generation) video conferencing applications which provide an immersive communication sensation.

We developed real-time methods to analyze the scene and human behavior in each meeting room. Using a distributed smart camera network, in each meeting room we:

[demo video; 7.8 Mb] | keep track of the position (trajectory) of each participant [1]; | ||

[demo video; 3.3 Mb] | recognize participants via face recognition [2]; | ||

| detect visual clues for communication control, i.e., detect raising hand gestures [3], detect speakers, detect head movements (head shaking, nodding, gaze direction); | ||

[demo video; 5.7 Mb] | analyze the behavior of each participant (walking, standing, sitting); | ||

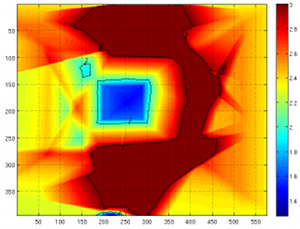

Density map of tracks: red: high people passage (so probably no furniture); blue: no people passing (so probably table) | determine positions of chairs and table from the estimated tracks of the participants [4]. |
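A density map of tracks of this kind can be approximated by counting track points per floor cell. The sketch below is an illustration only: the grid resolution, the function names and the rule that never-visited cells are furniture candidates are assumptions for this example, and the method in [4] is more elaborate.

```python
import math


def density_map(tracks, width, height, cell=1.0):
    """Count how many track points fall into each grid cell of the floor plan."""
    cols, rows = int(width / cell), int(height / cell)
    grid = [[0] * cols for _ in range(rows)]
    for track in tracks:
        for x, y in track:
            c, r = math.floor(x / cell), math.floor(y / cell)
            if 0 <= c < cols and 0 <= r < rows:  # ignore points outside the room
                grid[r][c] += 1
    return grid


def likely_furniture(grid):
    """Cells that nobody ever crossed are candidate furniture locations (row, col)."""
    return {(r, c) for r, row in enumerate(grid) for c, n in enumerate(row) if n == 0}


# One participant walks around the border of a 3 m x 3 m room with a table in the middle.
walk = [(0.5, 0.5), (1.5, 0.5), (2.5, 0.5), (2.5, 1.5),
        (2.5, 2.5), (1.5, 2.5), (0.5, 2.5), (0.5, 1.5)]
grid = density_map([walk], width=3.0, height=3.0)
print(likely_furniture(grid))  # {(1, 1)}
```

With longer recordings the counts become a usable estimate of walking paths, and a threshold above zero can be used to tolerate occasional tracking errors that cross furniture.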

References:

[1] Sebastian Gruenwedel, Vedran Jelaca, Jorge Oswaldo Niño-Castañeda, Peter Van Hese, Dimitri Van Cauwelaert, Peter Veelaert and Wilfried Philips, "Decentralized tracking of humans using a camera network", Proc. of SPIE Electronic Imaging 2012

[2] Francis Deboeverie, Peter Veelaert, Kristof Teelen, Wilfried Philips, "Face Recognition using Parabola Edge Map", Proc. of Advanced Concepts for Intelligent Vision Systems, vol. 5259, pp. 1079-1088, 2008

[3] Nyan Bo Bo, Peter Van Hese, Dimitri Van Cauwelaert, Wilfried Philips, "Robust Raising-Hand Gesture Detector for Smart Meeting Room", ICIP 2012, submitted

[4] Xingzhe Xie, Sebastian Gruenwedel, Vedran Jelaca, Jorge Oswaldo Nino Castaneda, Dirk Van Haerenborgh, Dimitri Van Cauwelaert, Peter Van Hese, Wilfried Philips, Hamid Aghajan, "Learning about Objects in the Environment from People Trajectories", ICIP 2012, submitted

Material Analysis using Image Processing

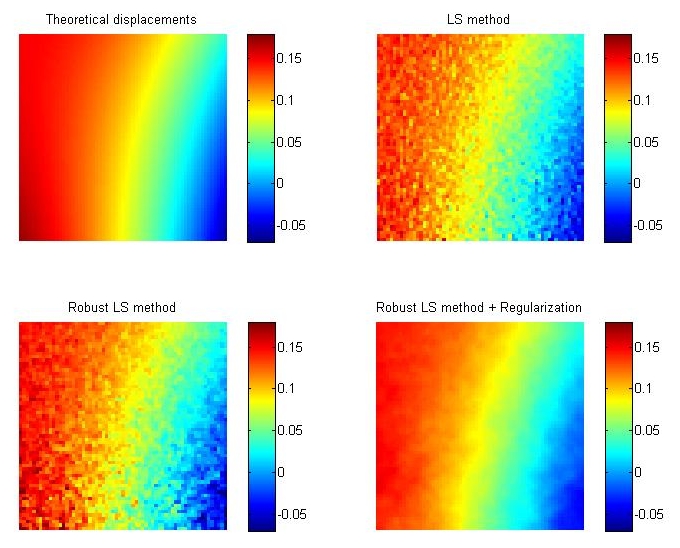

This research covers displacement and strain analysis of speckle-painted materials under mechanical stress using sub-pixel motion estimation techniques (multi-resolution robust and regularized optical flow frameworks); generation of dense artificial motion fields on sparse adaptive grids and image warping using radial basis function interpolation; and post-processing of motion estimation results through spatio-temporal filtering.

Contact: dr.ir. Jan Aelterman