Topics

- High Dynamic Range imaging

- Denoising of time-of-flight depth images and sequences

- Multicamera image fusion

- Non-local image reconstruction

- Multiframe superresolution

- Demosaicing

- Error Concealment

- Wavelet-based denoising of images

- Non-local means denoising of images

- Restoration of historical videos

- Joint removal of blocking artifacts and resolution enhancement

High Dynamic Range Imaging

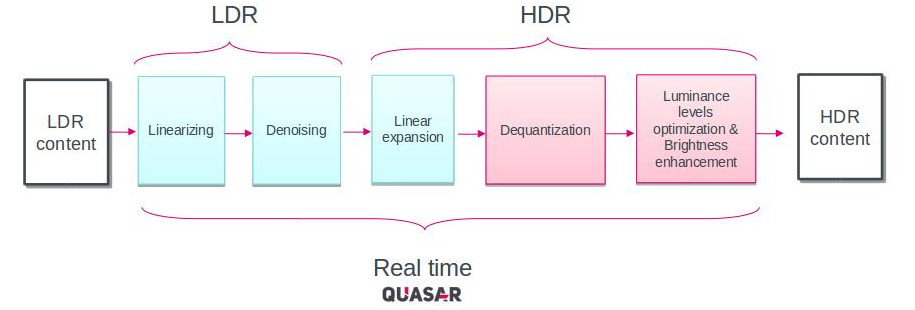

Conventional display and image capture technology is limited to a narrow range of luminance, and images associated with such systems have retroactively been called Low Dynamic Range (LDR) images, also known as Standard Dynamic Range (SDR) images. A High Dynamic Range (HDR) image, on the other hand, encodes a greater range of brightness than a reference LDR image. HDR images are intended for near-future, more capable display and camera systems, and they match the visual impression of a scene to human vision more faithfully than LDR images do.

Recent developments in so-called HDR televisions have yielded prototypes that can display peak luminances in the range of 6000 nits. It is expected that in the coming years, Intermediate Dynamic Range (IDR) displays that support luminance ranges between 1000 and 4000 nits will become available to the consumer market at reasonable prices. These developments open up the possibility of improving the visual experience of watching a movie. Unfortunately, this reveals the elephant in the room: nearly all existing movie content has been recorded and/or stored in LDR formats. Displaying it on an HDR display system requires an LDR-to-HDR conversion step, a so-called Inverse Tone Mapping technique.

Our approach to Inverse Tone Mapping is specifically conceived to expand LDR video streams to HDR. It combines real-time processing with powerful artifact suppression and includes a technique to recover high-brightness areas lost in SDR storage. The real-time processing relies on GPU acceleration, made possible by fast prototyping in the Quasar programming language. The algorithm pipeline is shown in Figure 1.

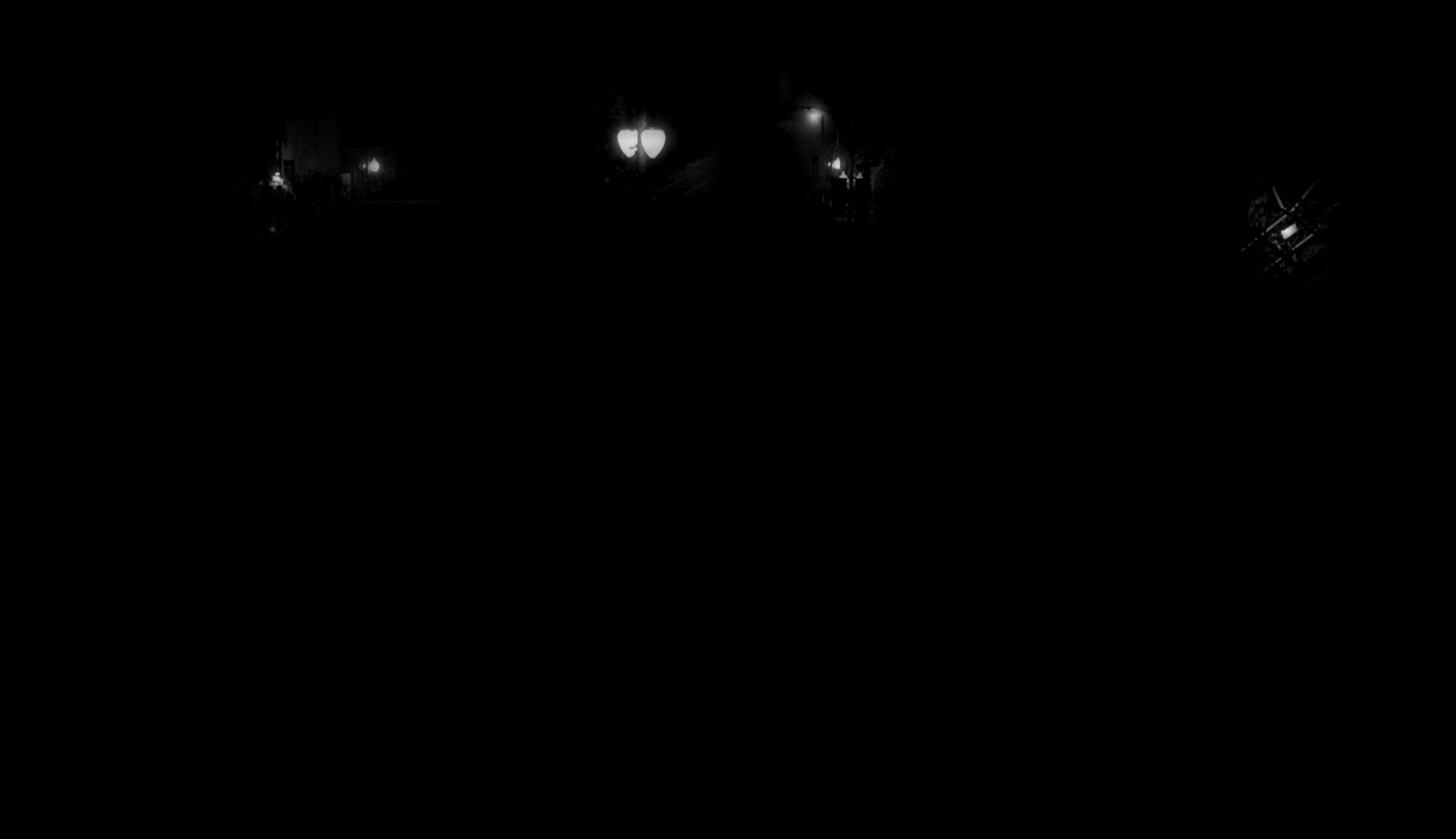

The following pictures show some results of applying our method. All the images were modified for better viewing on an LDR display.

Our approach identifies the regions with high luminance levels, which are boosted up to the maximum luminance supported by the HDR display. The expansion map incorporates a preliminary classification to distinguish truly bright spots (that were compressed in the SDR format) from lower-brightness spots that were mapped to the same value in the SDR format.

Figure 1. LDR to HDR conversion pipeline.
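The idea of boosting only the brightest regions can be illustrated with a minimal per-pixel sketch. It is not the method described above: the expansion weight here is a plain smooth ramp rather than a classification-based expansion map, and the function name `expand_luminance` as well as all parameter values (`ldr_peak_nits`, `hdr_peak_nits`, `threshold`, `gamma`) are assumptions chosen for illustration.

```python
def expand_luminance(ldr, ldr_peak_nits=100.0, hdr_peak_nits=1000.0,
                     threshold=0.8, gamma=2.2):
    """Map normalized LDR luminance values (0..1) to absolute luminance in nits.

    All defaults are illustrative assumptions, not the authors' settings.
    """
    out = []
    for v in ldr:
        v = min(max(v, 0.0), 1.0)          # clamp to the valid LDR range
        linear = v ** gamma                # undo the assumed display gamma
        # Expansion weight: 0 below the threshold, rising smoothly to 1 at v = 1.
        t = max(0.0, (v - threshold) / (1.0 - threshold))
        weight = t * t * (3.0 - 2.0 * t)   # smoothstep ramp
        # Blend between plain LDR rendering and the full HDR peak.
        peak = ldr_peak_nits + weight * (hdr_peak_nits - ldr_peak_nits)
        out.append(linear * peak)
    return out


nits = expand_luminance([0.0, 0.5, 0.9, 1.0])
```

With these assumed defaults, mid-tones stay at their LDR rendering, while values above the threshold are progressively stretched until a fully saturated pixel reaches the display peak.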



Contact: dr.ir. Jan Aelterman

Denoising of time-of-flight depth images and sequences

Recent 3D imaging techniques based on the time-of-flight (TOF) principle are gaining popularity as a technology for 3D capture in future 3D TV systems [18], 3D biometric authentication, 3D human-machine interaction, etc. TOF sensors measure the time that light emitted by an illumination source needs to travel to an object and back to the detector. Due to the limited optical power of the light source, the depth images are rather noisy, and the resulting distance measurements are relatively inaccurate.
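As a small worked example of the time-of-flight principle: because the light covers the sensor-to-object distance twice, the distance is half the round-trip time multiplied by the speed of light. The function name `tof_distance` is an illustrative assumption.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second (exact by definition)


def tof_distance(round_trip_seconds):
    """Distance to the object, given the measured round-trip travel time."""
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0


distance_m = tof_distance(10e-9)  # a 10 ns round trip
```

A round trip of 10 ns corresponds to roughly 1.5 m, which shows how small the timing errors must be to resolve depth at the centimetre scale.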

We have developed wavelet-based methods for depth image denoising that use luminance images to determine the power of the optical signal reflected from the scene, and hence the signal-to-noise ratio for every pixel in the depth image. Moreover, we use luminance information to better restore object boundaries masked by noise in the depth images. In particular, we take into account the correlation between the measured depth and luminance, and the fact that edges present in the depth image are likely to occur in the luminance image as well.

Sequences of depth images contain large temporal redundancy, which can be exploited for temporal noise filtering. We have proposed a method that performs motion-compensated temporal filtering of depth video sequences. The proposed multi-frame motion estimation relies on information from both the depth and the luminance to find the most similar segments in neighboring frames. The motion-compensated temporal filtering depends on the reliability of the estimated motion and on locally estimated noise variances.
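One simple way to let a temporal filter depend on motion reliability and local noise variance is a weighted average in which each motion-compensated sample is weighted by its reliability divided by its noise variance. The sketch below shows only this generic weighting, under the assumption that variances are strictly positive and reliabilities lie in [0, 1]; it is not the published algorithm, and `fuse_depth` is a hypothetical name.

```python
def fuse_depth(samples):
    """Fuse motion-compensated depth samples belonging to one pixel.

    samples: list of (depth, noise_variance, motion_reliability) tuples.
    The weighting rule is an illustrative assumption.
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for depth, variance, reliability in samples:
        weight = reliability / variance   # trust clean, well-matched samples more
        weighted_sum += weight * depth
        weight_total += weight
    if weight_total == 0.0:
        raise ValueError("no reliable samples to fuse")
    return weighted_sum / weight_total


# Current frame (low noise) and one neighbouring frame (higher noise).
fused = fuse_depth([(2.0, 0.01, 1.0), (2.2, 0.04, 1.0)])
```

In this example the noisier sample receives a quarter of the weight of the cleaner one, so the fused depth stays close to 2.0; a sample with zero motion reliability would be ignored entirely.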

Noisy depth image

Result of denoising

References:

Lj. Jovanov, A. Pizurica, and W. Philips, "Denoising Algorithm for the 3D Depth Map Sequences Based on Multihypothesis Motion Estimation", EURASIP Journal on Advances in Signal Processing, 2011, 2011:131.

Lj. Jovanov, A. Pizurica, and W. Philips, "Fuzzy logic-based approach to wavelet denoising of 3D images produced by time-of-flight cameras", Optics Express, Volume 18, Issue 22, October 2010, pp. 22651–22676.

Contact: dr. ir. Ljubomir Jovanov

Multicamera image fusion

Images captured using multiple discrete cameras contain additional information about the scene. However, because the cameras differ in viewpoint, in settings such as focus, exposure time, gain and color temperature, and in artifacts such as noise, using this information to create a more informative fused (hybrid) image is often highly complex. To solve this problem, we propose a method for artificial view synthesis based on two or more video streams captured with a multiview camera system. The algorithm synthesizes novel views from multiple differently focused, noisy and color-mismatched images.


References: L. Jovanov, H. Luong, and W. Philips, "Multiview Video Quality Enhancement", Journal of Electronic Imaging, vol. 25, no. 1.

Contact: dr. ir. Ljubomir Jovanov

Non-local image reconstruction

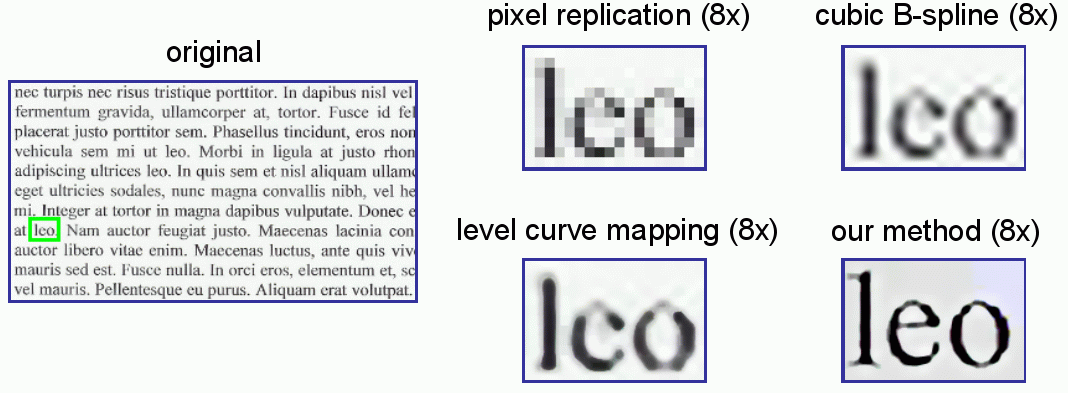

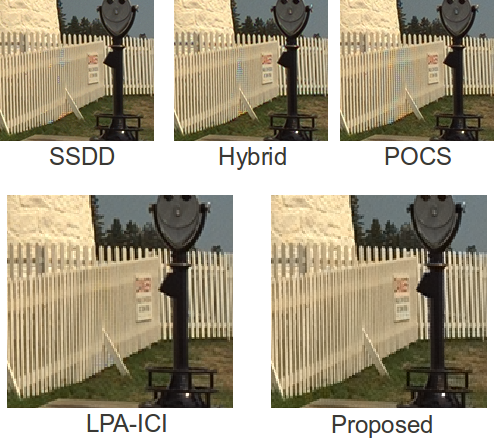

This technique upscales low-resolution images using a local form of superresolution. Instead of combining multiple low-resolution images, information from similar local patches of the same image is combined to improve the local resolution. In the example below, we compare our technique with some competing techniques for image upscaling.

References:

H.Q. Luong, A. Ledda, W. Philips, "Non-Local Interpolation", in Proc. IEEE International Conference On Image Processing, pp. 693-696, Atlanta, USA, October 8-11, 2006.

H.Q. Luong, A. Ledda, W. Philips, "An Image Interpolation Scheme For Repetitive Structures", in Lecture Notes in Computer Science 4141 (International Conference On Image Analysis And Recognition), pp. 104-115, Povoa De Varzim, Portugal, September 18-20, 2006.

Contact: dr. ir. Hiep Quang Luong

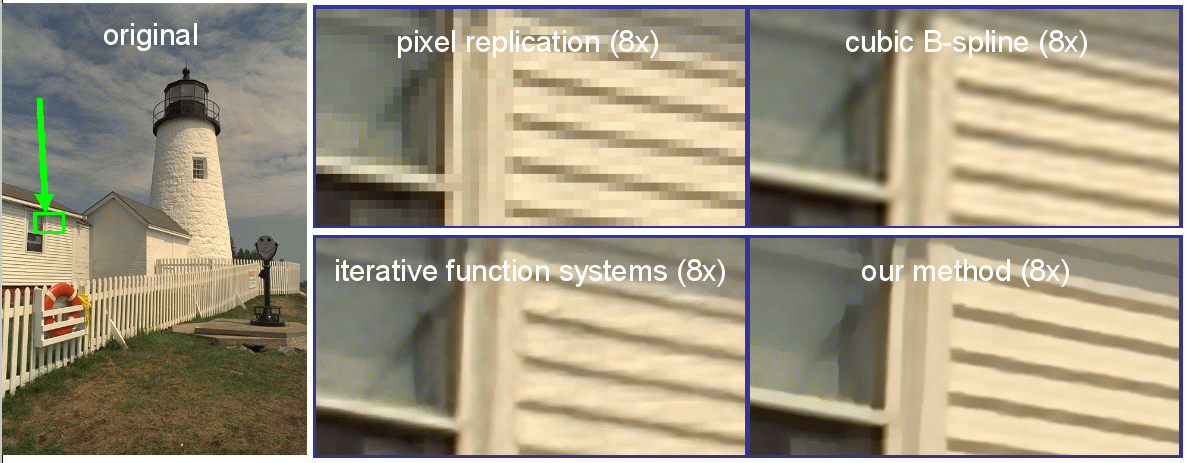

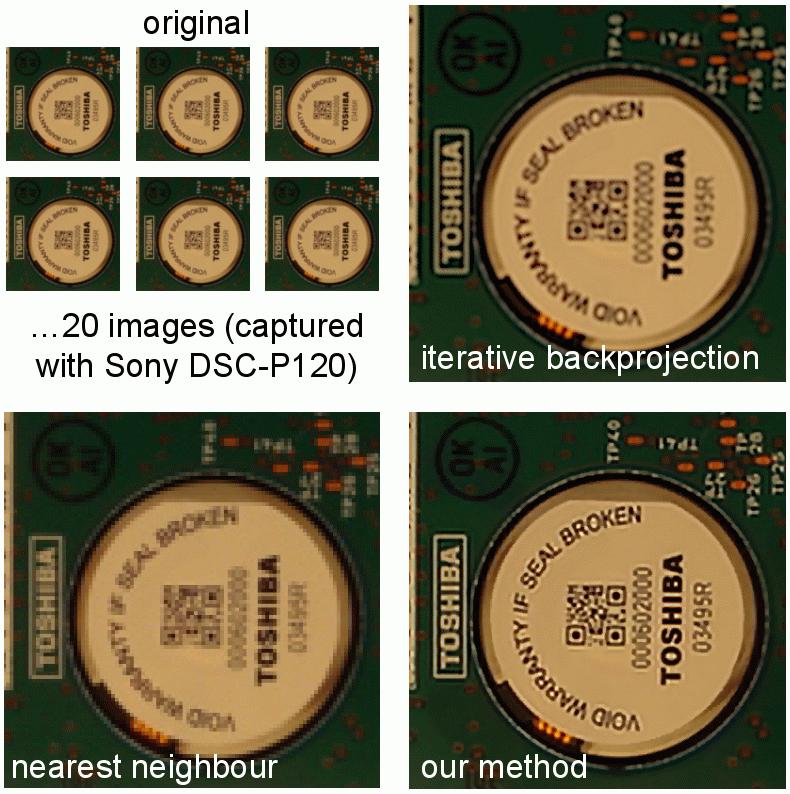

Multiframe superresolution

Multi-frame super resolution is a combination of motion estimation, data fusion, denoising and deblurring. We have developed a very fast technique; a real super resolution result is shown here (left: one of the 20 original pictures taken with a Sony DSC-P120 digital camera; right: our result):

References: H.Q. Luong, S. Lippens, W. Philips, "Practical and Robust Super Resolution Using Anisotropic Diffusion For Under-Determined Cases", in Proc. 2nd annual IEEE BENELUX/DSP Valley Signal Processing Symposium (SPS-DARTS 2006), pp. 139-142, Antwerp, Belgium, March 28-29, 2006.

Contact: dr. ir. Hiep Quang Luong

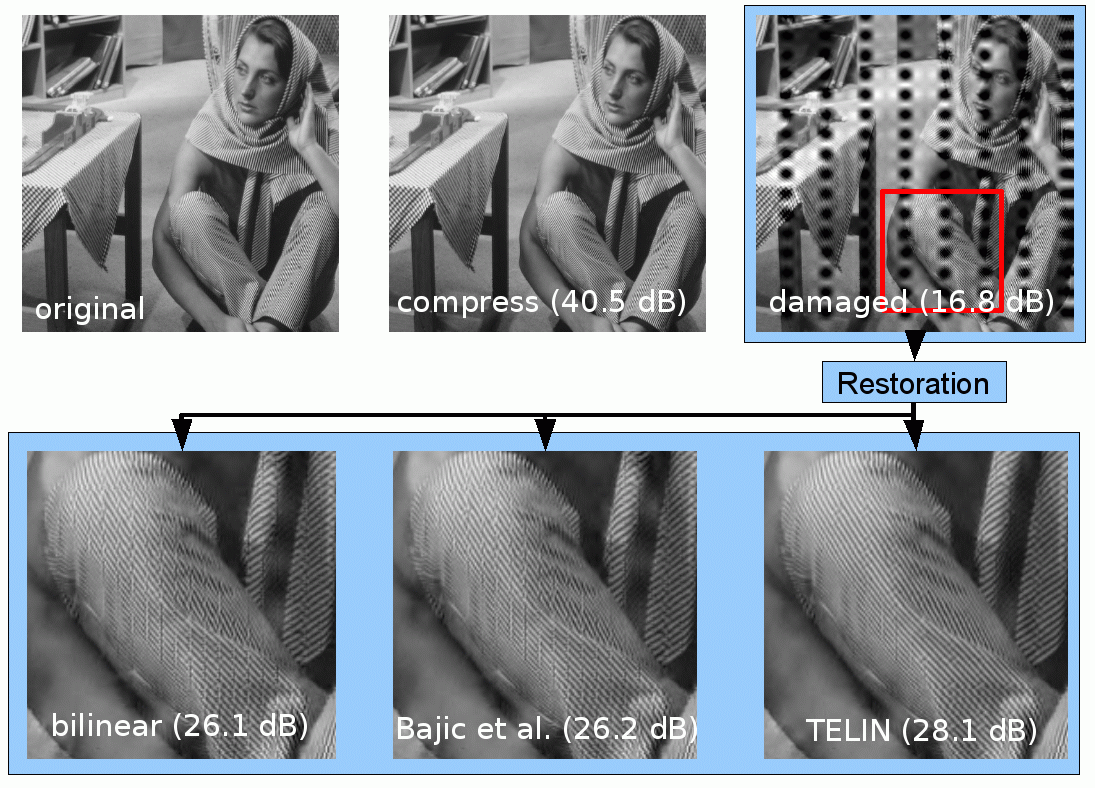

Error Concealment

This technique reconstructs image data that was lost during transmission over a network that drops data packets. In the example below, the top left shows the original image, the top middle shows the compressed image ready for transmission, and the top right shows a simulation of the damaged image after transmission. The bottom row shows the results of different techniques for reconstructing the lost data inside the red square of the top right image.

References: J. Rombaut, A. Pizurica, and W. Philips, "Locally adaptive passive error concealment for wavelet coded images," IEEE Signal Processing Letters, vol. 15, pp. 178-181, 2008.

Contact: dr.ir. Jan Aelterman

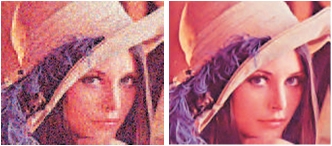

Demosaicing

Most digital cameras capture only one color (red, green or blue) per pixel, arranged in the so-called Bayer pattern. Interpolation to obtain a full color image sometimes results in errors (orange-blue artifacts in the fence and walls). Our method significantly reduces these artifacts.
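To make the interpolation step concrete, the sketch below implements plain bilinear demosaicing, i.e. the kind of simple baseline that tends to produce color artifacts near edges, not the complex wavelet-based method referenced in this section. It assumes an RGGB Bayer layout and an image of at least 2×2 pixels; the function names are illustrative.

```python
def bayer_channel(y, x):
    """Channel sampled at (y, x): 0 = red, 1 = green, 2 = blue (assumed RGGB layout)."""
    if y % 2 == 0 and x % 2 == 0:
        return 0
    if y % 2 == 1 and x % 2 == 1:
        return 2
    return 1


def demosaic_bilinear(raw):
    """Fill in the two missing colors per pixel by averaging nearby samples.

    raw: 2D list with one sensor value per pixel (at least 2x2).
    Returns a 2D list of [r, g, b] triples.
    """
    h, w = len(raw), len(raw[0])
    rgb = [[[0.0, 0.0, 0.0] for _ in range(w)] for _ in range(h)]
    for y in range(h):
        for x in range(w):
            for c in range(3):
                if bayer_channel(y, x) == c:
                    rgb[y][x][c] = float(raw[y][x])  # measured value, keep as is
                    continue
                # Average all samples of channel c in the 3x3 neighbourhood.
                vals = [raw[j][i]
                        for j in range(max(0, y - 1), min(h, y + 2))
                        for i in range(max(0, x - 1), min(w, x + 2))
                        if bayer_channel(j, i) == c]
                rgb[y][x][c] = sum(vals) / len(vals)
    return rgb
```

On a uniformly colored scene this interpolation is exact; the artifacts appear where neighbouring samples straddle an edge, because each color channel is interpolated independently of the others.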

References: J. Aelterman, B. Goossens, H. Q. Luong, A. Pizurica and W. Philips, "Locally adaptive complex wavelet-based demosaicing for color filter array images," SPIE Electronic Imaging 2009, San Jose, CA, USA, 18-22 Jan. 2009.

Contact: dr.ir. Jan Aelterman

Wavelet-based denoising of images

ProbShrink is a locally adaptive image denoising method in the wavelet domain: each coefficient is shrunk according to the probability that it represents a signal of interest, given either a scalar measurement called the local spatial activity indicator or a vector of the surrounding coefficients.
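The shrinkage rule can be sketched with Bayes' rule in odds form: the posterior odds that a coefficient contains signal equal the prior odds multiplied by the likelihood ratios of the observations, and the coefficient is scaled by the resulting probability. The sketch assumes the coefficient and the local activity indicator are conditionally independent given the hypothesis, and it takes the likelihood ratios as given inputs; estimating them from the image is the substantial part of the actual method and is not shown. All names are illustrative.

```python
def probability_shrink(coefficient, prior_odds, lr_coefficient, lr_activity):
    """Scale a wavelet coefficient by the probability that it carries signal.

    prior_odds:     P(signal) / P(no signal)
    lr_coefficient: likelihood ratio of the coefficient value itself
    lr_activity:    likelihood ratio of the local spatial activity indicator
    """
    posterior_odds = prior_odds * lr_coefficient * lr_activity
    probability = posterior_odds / (1.0 + posterior_odds)
    return probability * coefficient


shrunk = probability_shrink(4.0, prior_odds=1.0, lr_coefficient=3.0, lr_activity=1.0)
```

Here the posterior odds are 3, so the probability of signal is 0.75 and the coefficient 4.0 is shrunk to 3.0; a coefficient whose likelihood ratios point to pure noise is shrunk towards zero.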

References:

B. Goossens, A. Pizurica and W. Philips, "Removal of Correlated Noise by Modeling the Signal of Interest in the Wavelet Domain," IEEE Transactions on Image Processing, vol. 18, no. 6, pp. 1153-1165, June 2009.

A. Pizurica and W. Philips, "Estimating the probability of the presence of a signal of interest in multiresolution single- and multiband image denoising," IEEE Transactions on Image Processing, vol. 15, no. 3, pp. 654-665, March 2006.

B. Goossens, A. Pizurica, and W. Philips, "Removal of correlated noise by modeling spatial correlations and interscale dependencies in the complex wavelet domain," in Proceedings of IEEE's 14th International Conference on Image Processing (ICIP), San Antonio, Texas, USA, 16-19 September 2007.

A. Pizurica, W. Philips, I. Lemahieu and M. Acheroy, "A Joint Inter- and Intrascale Statistical Model for Bayesian Wavelet Based Image Denoising," IEEE Transactions on Image Processing, vol. 11, no. 5, pp. 545-557, May 2002.

Contact: dr. ir. Bart Goossens and [dr. ir. Aleksandra Pizurica](mailto:aleksandra.pizurica@ugent.be)

Non-local means denoising of images

Similar to non-local image reconstruction, this technique exploits image self-similarity: similar structures are found in different areas of the image, and the information they provide is used to denoise a given area.
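The self-similarity idea can be sketched with the basic non-local means filter: every pixel is replaced by a weighted average of pixels in a search window, where the weight depends on how similar the surrounding patches are. This is a dependency-free sketch of the textbook filter, not the improved algorithm of the reference below; the parameter names and defaults (`patch`, `search`, `h`) are assumptions.

```python
import math


def nlm_denoise(img, patch=1, search=3, h=10.0):
    """Basic non-local means on a 2D list of floats.

    patch: patch radius, search: search-window radius, h: filter strength.
    """
    rows, cols = len(img), len(img[0])

    def px(y, x):
        # Clamp coordinates so patches near the border stay defined.
        return img[min(max(y, 0), rows - 1)][min(max(x, 0), cols - 1)]

    def patch_distance(y0, x0, y1, x1):
        # Mean squared difference between the patches around two pixels.
        total = 0.0
        for dy in range(-patch, patch + 1):
            for dx in range(-patch, patch + 1):
                diff = px(y0 + dy, x0 + dx) - px(y1 + dy, x1 + dx)
                total += diff * diff
        return total / (2 * patch + 1) ** 2

    out = [[0.0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            weighted_sum = weight_total = 0.0
            for j in range(max(0, y - search), min(rows, y + search + 1)):
                for i in range(max(0, x - search), min(cols, x + search + 1)):
                    # Similar patches get weights near 1, dissimilar ones near 0.
                    w = math.exp(-patch_distance(y, x, j, i) / (h * h))
                    weighted_sum += w * img[j][i]
                    weight_total += w
            out[y][x] = weighted_sum / weight_total
    return out
```

The nested loops make this sketch slow for real images; it is meant only to show how patch similarity, rather than spatial proximity, determines which pixels contribute to the average.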

References:

B. Goossens, H.Q. Luong, A. Pizurica, W. Philips, "An improved non-local means algorithm for image denoising," in 2008 International Workshop on Local and Non-Local Approximation in Image Processing (LNLA2008), Lausanne, Switzerland, Aug. 25-29 (invited paper)

Contact: dr. ir. Bart Goossens

Restoration of historical videos

These videos show degradations that are typical of historical footage but for which standard video reconstruction algorithms are lacking. We provide dedicated algorithms for the removal of shaky motion (i.e. video stabilization), scratch removal, noise reduction, etc.

Contact: dr.ir. Hiep Luong

Joint removal of blocking artifacts and resolution enhancement

Video streaming has recently become one of the fastest growing sources of Internet traffic. To satisfy bandwidth constraints, video sequences sometimes have to be heavily compressed, which often results in blocking artifacts. We have developed a number of algorithms that simultaneously reduce these artifacts and increase the resolution of video sequences. Thanks to these improvements it is possible, for example, to view YouTube videos on bigger HD displays.

Contact: dr.ir. Hiep Luong